Building Trust in AI Responses: Our AI Fact Checker Design for Anthropic

Project

Anthropic | AI Fact Checker – Design Challenge

Role

Product Design | Research, Design Thinking, Rapid Prototyping, End-To-End Design

Team

Me, Co-designer Pania

Tools

Figma, Claude, Figma Slides

Summary

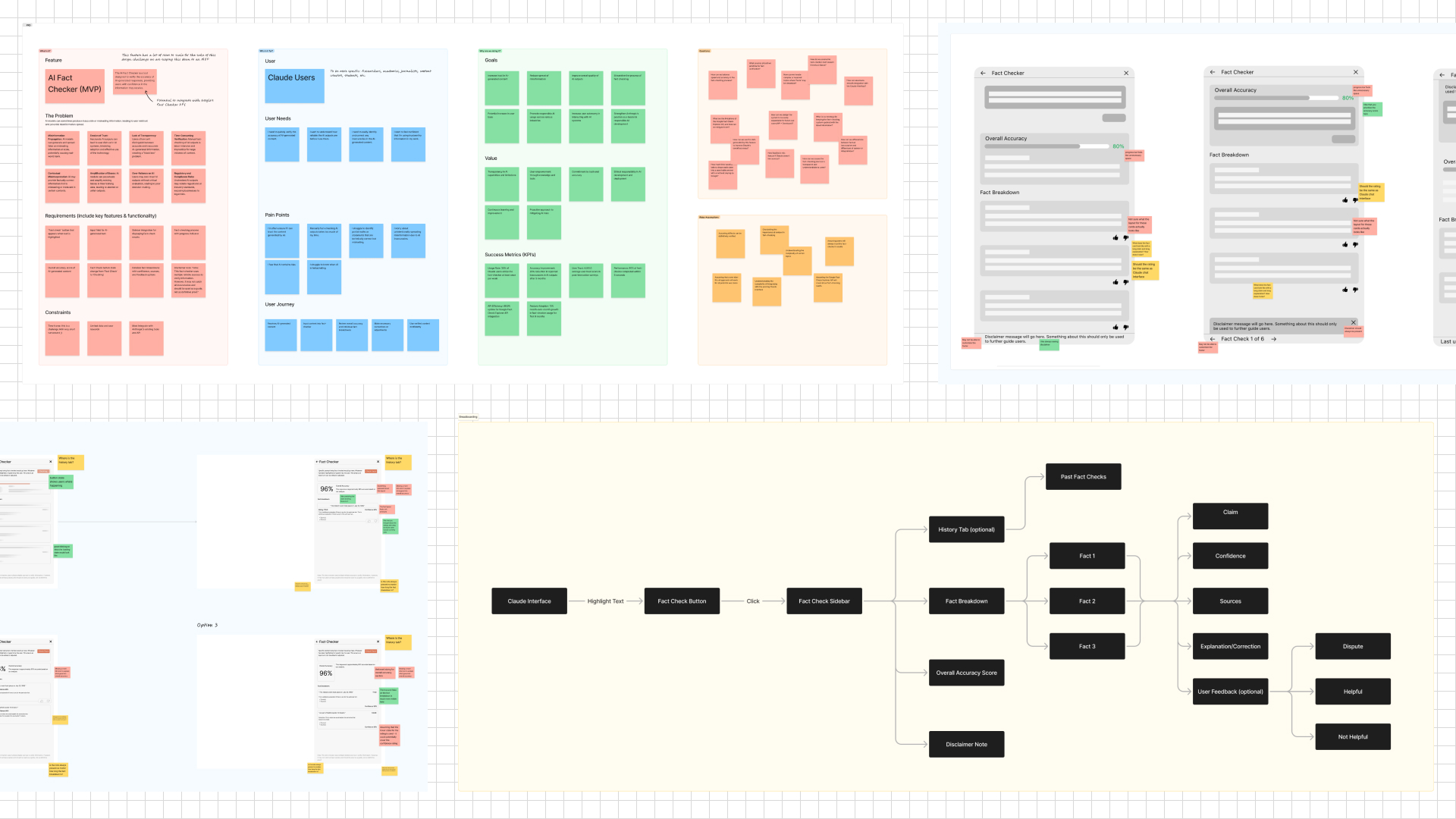

Pania and I applied as a mini design team for a Product Designer role at Anthropic. As a follow-up to our dual application, we took on a 72-hour end-to-end design challenge to showcase our skills. We designed an AI fact-checker for Claude to help users verify responses, aligning with Anthropic’s focus on ethical AI and user trust.


The Problem

When AI models like Claude generate responses, a major user concern is whether the information is accurate. Users often have no straightforward way to verify facts, so errors can go unnoticed and spread as misinformation. We aimed to build user trust by offering a tool to fact-check AI-generated content quickly and efficiently.

Strategy

Given the limited time, we focused on rapid research and ideation. We started by identifying key user pain points, including the need for an easy way to verify AI-generated information. From there, we created user personas and journey maps to ensure we had a deep understanding of user needs. We rapidly developed low-fidelity wireframes, which we reviewed and refined into high-fidelity designs and prototypes. Finally, we designed a presentation slide deck to showcase our solution for Anthropic.

Goals

- Design a feature to verify the accuracy of AI-generated content in Claude.

- Ensure the feature is easy to use and seamlessly integrates into Claude’s existing interface.

- Empower users by providing them with transparent, trustworthy information from AI responses.

- Align the solution with Anthropic’s commitment to ethical AI and user safety.

- Develop a feature that could potentially scale and be adaptable for future enhancements.

Constraints

- Real-time fact-checking: The solution would need to work quickly and accurately, requiring reliable external APIs or in-house solutions.

- Seamless integration: The fact-checker tool needed to fit smoothly into Claude’s current interface without adding complexity for the user.

- Scalability: The solution needed to grow and adapt with future developments in both AI capabilities and user needs.

- Time limitations: Working within a 72-hour timeframe limited our ability to collect real user data and test the solution.

Solution

We conceptualized and designed an AI fact-checker specifically for Claude, the AI model by Anthropic. Our solution allows users to quickly and easily verify the accuracy of AI responses, providing them with the confidence to rely on the information they receive. This feature was designed with Anthropic’s values of ethical AI and safety in mind, empowering users to make informed decisions by giving them control over the verification process.

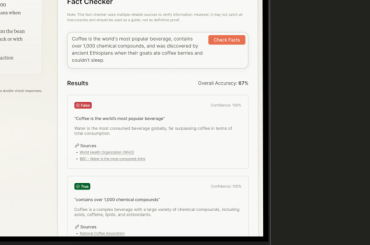

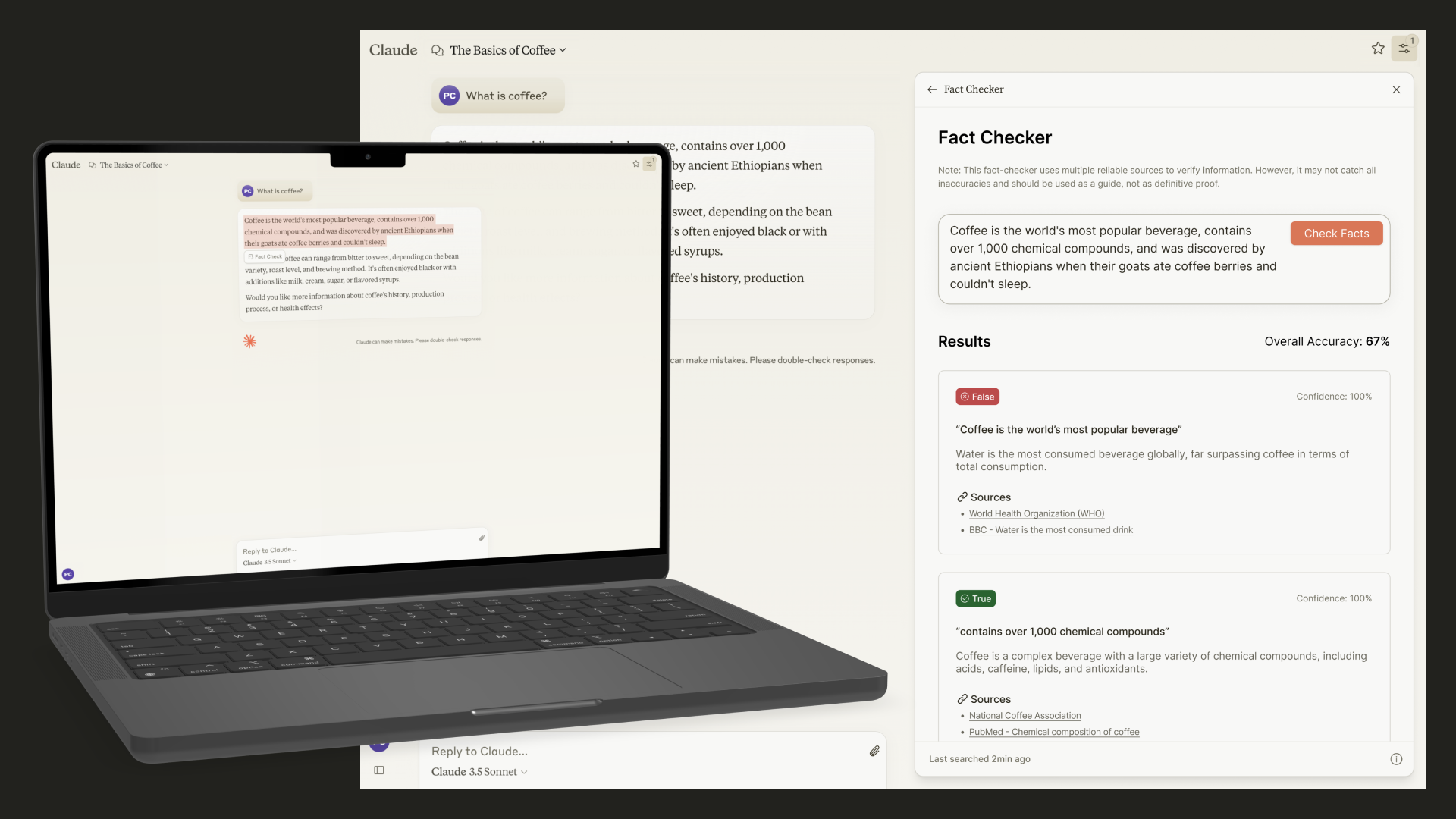

Here’s how the fact-checker works: Users simply highlight the portion of Claude’s response they wish to verify and click the “Fact Check” button. This triggers a sidebar that displays an overall accuracy score for the highlighted text. The sidebar also breaks down each fact, marking it as true, false, or partially true, and providing a summary for each. If any part of the response is identified as false, the tool suggests corrections, guiding users toward more accurate information. This seamless, intuitive workflow helps ensure that users can confidently interact with AI-generated content.
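
To make the sidebar's contents concrete, here is a minimal sketch of the data it would display. We did not build or specify any of this during the challenge: the names `Verdict`, `CheckedClaim`, `accuracy_score`, and `build_sidebar` are hypothetical, and the scoring rule (true counts fully, partially true counts half, false counts zero) is an assumption for illustration only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Verdict(Enum):
    """The three labels the sidebar shows for each fact."""
    TRUE = "true"
    PARTIALLY_TRUE = "partially true"
    FALSE = "false"


# Assumed weights: how much each verdict contributes to the overall score.
VERDICT_WEIGHTS = {
    Verdict.TRUE: 1.0,
    Verdict.PARTIALLY_TRUE: 0.5,
    Verdict.FALSE: 0.0,
}


@dataclass
class CheckedClaim:
    """One fact extracted from the highlighted text (hypothetical shape)."""
    text: str
    verdict: Verdict
    summary: str
    correction: Optional[str] = None  # only expected for false claims


def accuracy_score(claims):
    """Return an overall score from 0 to 100, or None if nothing was checked."""
    if not claims:
        return None
    total = sum(VERDICT_WEIGHTS[c.verdict] for c in claims)
    return round(100 * total / len(claims))


def build_sidebar(claims):
    """Assemble what the sidebar displays: score, per-fact breakdown, corrections."""
    return {
        "score": accuracy_score(claims),
        "facts": [
            {"text": c.text, "verdict": c.verdict.value, "summary": c.summary}
            for c in claims
        ],
        "corrections": [
            c.correction
            for c in claims
            if c.verdict is Verdict.FALSE and c.correction
        ],
    }


# Example: three facts pulled from one highlighted passage.
example_claims = [
    CheckedClaim("Water boils at 100 °C at sea level.", Verdict.TRUE,
                 "Matches standard references."),
    CheckedClaim("Humans use only 10% of their brains.", Verdict.FALSE,
                 "A well-known myth.",
                 correction="Humans use virtually all of their brain."),
    CheckedClaim("Coffee dehydrates you.", Verdict.PARTIALLY_TRUE,
                 "Caffeine is a mild diuretic, but coffee still hydrates."),
]
sidebar = build_sidebar(example_claims)
```

Keeping each verdict and correction on the individual fact, rather than only reporting a single score, reflects the design intent: the user can see exactly which statement lowered the score and why.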

Here's how we tackled the challenge:

- Identifying User Needs: We conducted rapid research to understand user pain points, specifically around verifying AI-generated information and the frustration of not knowing whether they could trust the content.

- Persona Development: We created user personas to visualize the typical Claude user, ensuring that the fact-checker feature addressed their specific needs.

- Rapid Prototyping: Given the 72-hour timeframe, we designed and iterated quickly, developing low-fidelity wireframes and refining them into high-fidelity designs based on real-time feedback.

- Focus on Seamless Integration: We prioritized designing a feature that could easily integrate into Claude’s existing interface without disrupting the user experience.

- Emphasizing Trust and Transparency: The solution was designed with the goal of fostering user trust by providing clear, transparent breakdowns of the fact-check results.

- Scalability Considerations: We also kept scalability in mind, designing the feature so that it could potentially draw on external services such as Google’s Fact Check Explorer (available programmatically through the Fact Check Tools API) for real-time fact verification.

Results & Impact

Because this was a design challenge, we couldn’t gather data from real users or fully assess the feature’s potential impact. However, we believe the solution has strong potential to meet user needs while aligning with Anthropic’s commitment to AI safety and ethics. Despite the lack of user testing, the project demonstrated our ability to think critically and design effectively under tight deadlines.

Potential Impact

- Increased User Trust: Offering real-time fact verification would give users greater confidence in Claude’s responses, fostering trust in the AI’s reliability.

- Combatting Misinformation: By actively validating AI-generated content, this tool could help prevent the spread of misinformation, leading to a more accurate and informed digital space.

- Increased AI Awareness: Users would develop a deeper understanding of how AI works, enhancing their awareness of its strengths and limitations, and encouraging more informed interactions.

- Efficient Workflows: For professionals and researchers, this tool would streamline workflows by reducing the time needed for manual fact-checking, increasing productivity.

- Promoting Ethical AI: The fact-checker encourages responsible AI use by prioritizing transparency and aligning with broader ethical standards across industries.

- Competitive Advantage: A built-in fact-checking feature could differentiate Claude from other AI models, positioning it as a more trustworthy and reliable tool in the market.

What's Next...

To further develop this solution, our first step would be to conduct user research with real Claude users, gathering feedback and making refinements based on their insights. We’d also need to evaluate the technical feasibility of relying on an external fact-checking API or developing an in-house solution. Additionally, creating a roadmap for scaling this feature would be essential, as it has significant potential to enhance user experience and trust.

/Fin

This project gave Pania and me a chance to showcase our ability to solve complex AI challenges under tight deadlines and high pressure. One key takeaway is the importance of user research, which would have been our first priority had this not been a time-boxed design challenge. We also recognized the need for strategic planning around integrating new features into existing systems, particularly in AI-driven applications. This challenge reaffirmed our commitment to designing with purpose, always keeping user trust and safety at the forefront.